Hi! I’m going to show you how to build a web crawler in PHP that finds all the links on a particular web page and creates a downloadable CSV file containing them. Web crawlers are bots that gather information from websites by scraping their HTML content. Search engines such as Google, Yahoo and Bing use the same kind of tool to find and index fresh content on the web.

Usually these search engines run their own bots around the clock, searching for new content to update their huge databases. This is why you can Google almost any query and get an answer.

Simple PHP Web Crawler Example

Building a crawler like Big G's that scans the whole web takes a lot of time and effort, but the underlying concept is the same. The simple PHP web crawler we are going to build scans a single web page and returns all of its links as a CSV (comma-separated values) file.

You Need Simple HTML DOM Parser Library

In order to crawl a web page you have to parse its HTML content. Though HTML parsing can be done with good old JavaScript, using a DOM parser makes life easier for us as developers. So here I’m going to use the Simple HTML DOM Parser library. First go to this link and download the library. Extract its contents and move the file ‘simple_html_dom.php’ to your working folder. Now you can use the DOM parser by simply including this file in your PHP crawler script like this.

<?php include_once("simple_html_dom.php"); ?>

Building the Basic Web Crawler in PHP

Before we jump into building the crawler, we have to take a few things into consideration. The web page we scrape may contain duplicate links. To avoid repeated links, we first collect all the scraped links in an array and then eliminate the duplicates from the list at the end.
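To make the de-duplication step concrete, here is a minimal sketch that runs on its own, without the parser library. The URLs in `$scraped` are made-up sample values, not links taken from a real page.

```php
<?php
// Hypothetical sample of scraped href values (not from a real page).
$scraped = array(
    "http://www.example.com/",
    "http://www.example.com/about",
    "http://www.example.com/",
    "http://www.example.com/contact",
    "http://www.example.com/about",
);

// array_unique() keeps the first occurrence of each value and preserves
// the original keys, so array_values() is used to reindex from 0.
$links = array_values(array_unique($scraped));

print_r($links);
```

The `array_values()` call is optional. It only matters if you later rely on the array having consecutive numeric keys.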

Here is the php script for our basic website crawler.

Crawler.php

<?php
include_once("simple_html_dom.php");

// set target url to crawl
$url = "http://www.example.com/"; // change this

// open the web page
$html = new simple_html_dom();
$html->load_file($url);

// array to store scraped links
$links = array();

// crawl the webpage for links
foreach ($html->find("a") as $link) {
    array_push($links, $link->href);
}

// remove duplicates from the links array
$links = array_unique($links);

// set output headers to download file
header("Content-Type: text/csv; charset=utf-8");
header("Content-Disposition: attachment; filename=links.csv");

// set file handler to output stream
$output = fopen("php://output", "w");

// output the scraped links, one link per CSV row
foreach ($links as $link) {
    fputcsv($output, array($link));
}

fclose($output);
?>

- The `$url` variable contains the target URL to scrape. This can be any valid remote URL.
- The expression `$html->find("a")` in the `foreach` statement searches for all `<a>` (anchor) elements, and the loop stores the value of each `href` attribute in the `$links` array.
- The function `array_unique()` removes duplicate items from the given array.
- Setting the output headers makes the browser download the crawler's output as a CSV file containing the scraped links.
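If you want to try the link-extraction step without downloading the library first, the same idea can be sketched with PHP's built-in `DOMDocument` class. This is a swapped-in technique, not the Simple HTML DOM API used in this tutorial, and it assumes the DOM extension is enabled. The `$page` string is a hypothetical stand-in for a downloaded page.

```php
<?php
// Hypothetical HTML snippet standing in for a downloaded page.
$page = '<html><body>
<a href="/about">About</a>
<a href="http://www.example.com/contact">Contact</a>
<a name="top">No href here</a>
</body></html>';

// Collect parser warnings internally instead of printing them,
// since real-world markup is often imperfect.
libxml_use_internal_errors(true);

$dom = new DOMDocument();
$dom->loadHTML($page);

$links = array();
foreach ($dom->getElementsByTagName("a") as $anchor) {
    // Skip anchors that have no href attribute.
    if ($anchor->hasAttribute("href")) {
        $links[] = $anchor->getAttribute("href");
    }
}

print_r($links);
```

Note that the first link is relative (`/about`). Both approaches return `href` values exactly as they appear in the markup, so relative links are not expanded into full URLs.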

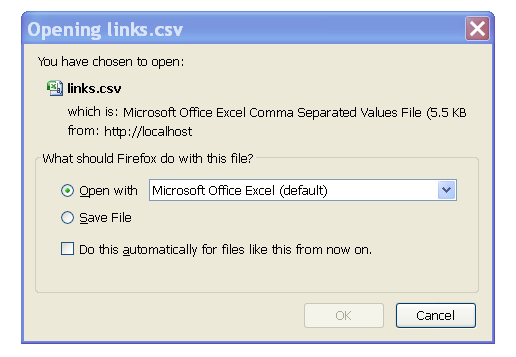

Done! We have created a basic web crawler in php. Change the $url variable to the target url you want to crawl and run the script in the browser. You will get a csv downloadable file like this,
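To inspect what the CSV step produces without triggering a browser download, you can write to an in-memory stream instead of `php://output`. The sketch below assumes a small hypothetical `$links` array and writes one link per row. The delimiter, enclosure and escape arguments are passed explicitly, set to PHP's traditional defaults.

```php
<?php
// Hypothetical list of links, standing in for the scraped $links array.
$links = array(
    "http://www.example.com/",
    "http://www.example.com/about",
);

// php://memory replaces php://output here so the result can be read back.
$output = fopen("php://memory", "w+");

foreach ($links as $link) {
    // Each call writes one CSV row containing a single field.
    fputcsv($output, array($link), ",", '"', "\\");
}

// Move back to the start of the stream and read everything written so far.
rewind($output);
$csv = stream_get_contents($output);
fclose($output);

echo $csv;
```

Writing one row per link means a link that contains a comma or a quote is still quoted correctly within its own row.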

That is how you can build a simple PHP web crawler. It is nothing serious, but it does the job. If you are serious about developing crawlers, I hope this little tutorial has laid some foundation :)